
Artificial intelligence will bring enormous benefits to society and the economy by amplifying our own intelligence and creativity as well as giving us a critical tool for overcoming the challenges that lie ahead.

However, AI has a dark side.

One of the most important dangers is the emergence of deepfakes: high-quality fabrications that can be put to a variety of malicious uses, with the potential to threaten our security or even undermine the integrity of elections and the democratic process.

In July 2019, the cybersecurity firm Symantec revealed that three unnamed corporations had been bilked out of millions of dollars by criminals using audio deepfakes. In all three cases, the criminals used an AI-generated audio clip of the company CEO’s voice to fabricate a phone call ordering financial staff to move money to an illicit bank account.

Because the technology is not yet at the point where it can produce truly high-quality audio, the criminals intentionally inserted background noise (such as traffic) to mask imperfections.

However, the quality of deepfakes is certain to get dramatically better in the coming years, and eventually will likely reach a point where truth is virtually indistinguishable from fiction.

Deepfakes are often powered by an innovation in deep learning known as a “generative adversarial network,” or GAN. GANs deploy two competing neural networks in a kind of game that relentlessly drives the system to produce ever higher quality simulated media.

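For example, a GAN designed to produce fake photographs would include two integrated deep neural networks. The first network, called the “generator,” produces fabricated images. The second network, called the “discriminator,” is trained on a dataset consisting of real photographs and learns to judge whether a given image is real or fake. The generator’s fabrications are fed to the discriminator, and every time the discriminator spots a fake, that feedback is used to adjust the generator so that its next attempt is more convincing. As the two networks keep competing, both improve, until the generator’s output can pass for the real thing.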

This technique produces astonishingly impressive fabricated images. Search the internet for “GAN fake faces” and you’ll find numerous examples of high-resolution images that portray nonexistent individuals.
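To make the generator-versus-discriminator game concrete, here is a deliberately tiny sketch, not how any production system is built. It assumes one-dimensional “data” instead of photographs: real samples are numbers drawn from a normal distribution with mean 2.0, the generator is a linear map G(z) = a·z + b applied to random noise, and the discriminator is a single logistic unit D(x) = sigmoid(w·x + c). The function name `train_toy_gan`, the data distribution and all hyperparameters are illustrative assumptions; real image GANs use deep convolutional networks for both players. Gradients are written out by hand so the code needs only Python’s standard library.

```python
import math
import random


def sigmoid(u: float) -> float:
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 4000, batch: int = 32, lr: float = 0.05,
                  real_mean: float = 2.0, real_std: float = 0.5, seed: int = 0):
    """Alternate discriminator and generator updates on 1-D toy data (hypothetical setup)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator G(z) = a*z + b starts centered at 0, away from the real data
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w*x + c) starts undecided (D = 0.5)

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: descend -log D(real) - log(1 - D(fake)),
        # i.e. learn to score real samples high and fabricated ones low.
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: descend -log D(G(z)) (the "non-saturating" loss),
        # i.e. nudge a and b so the updated discriminator rates fakes as real.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            ga -= (1.0 - d) * w * z
            gb -= (1.0 - d) * w
        a -= lr * ga / batch
        b -= lr * gb / batch

    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator mean b = {b:.3f} (real mean 2.0)")
```

After training, the generator’s mean `b` should sit close to the real mean of 2.0. Because a linear discriminator can only tell two distributions apart by their averages, this toy generator matches the mean but not the spread; it is the far richer discriminators in real GANs that push generators to reproduce fine detail such as skin texture or lighting.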

Generative adversarial networks also can be deployed in many positive ways. For example, GAN-generated images might be used to train the deep neural networks in self-driving cars, or synthetic non-white faces might be used to train facial recognition systems as a way of overcoming racial bias.

GANs also can provide people who have lost the ability to speak with a computer-generated replacement that sounds like their own voice. The late Stephen Hawking, who lost his voice to the neurodegenerative disease ALS, or Lou Gehrig’s disease, famously spoke in a distinctive computer-synthesized voice. More recently, ALS patients like former NFL player Tim Shaw have had their natural voices restored by training deep learning systems on recordings made before the illness struck.

However, the potential for malicious use of the technology is inescapable and, as evidence already suggests for many tech-savvy individuals, irresistible.

An especially common deepfake technique enables the digital transfer of one person’s face to a real video of another person. According to the startup company Sensity (formerly Deeptrace), which offers tools for detecting deepfakes, there were at least 15,000 deepfake fabrications posted online in 2019, an 84% increase over the prior year. Of these, 96% involved pornographic images or videos in which the face of a celebrity—nearly always a woman—is transplanted onto the body of a pornographic actor.

While celebrities like Taylor Swift and Scarlett Johansson have been the primary targets, this kind of digital abuse could eventually be used against anyone, especially as the technology advances and the tools for making deepfakes become more available and easier to use.

A sufficiently credible deepfake could quite literally shift the arc of history—and the means to create such fabrications might soon be in the hands of political operatives, foreign governments or just mischievous teenagers.

Beyond videos or sound clips intended to attack or disrupt, there will be endless illicit opportunities for those who simply want to profit. Criminals will be eager to employ the technology for everything from financial and insurance fraud to stock-market manipulation. A video of a corporate CEO making a false statement, or perhaps engaging in erratic behavior, would likely cause the company’s stock to plunge.

Deepfakes will also throw a wrench into the legal system. Fabricated media could be entered as evidence, and judges and juries may eventually live in a world where it is difficult, or perhaps impossible, to know whether what they see before their eyes is really true.

To be sure, there are smart people working on solutions. Sensity, for example, markets software that it claims can detect most deepfakes. However, as the technology advances, there will inevitably be an arms race—not unlike the one between those who create new computer viruses and the companies that sell software to protect against them—in which malicious actors will likely always have at least a small advantage.

Basic Books

Ian Goodfellow, who invented GANs and has devoted much of his career to studying security issues within machine learning, says he doesn’t think we will be able to know whether an image is real or fake simply by “looking at the pixels.” Instead, we’ll eventually have to rely on authentication mechanisms like cryptographic signatures for photos and videos.

Perhaps someday every camera and mobile phone will inject a digital signature into every piece of media it records. One startup company, Truepic, already offers an app that does this. The company’s customers include major insurance companies that rely on photographs from their customers to document the value of everything from buildings to jewelry.
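How Truepic or any camera maker actually implements this is not described here, so the following is only a hypothetical sketch of the general idea: the capturing device computes a tag over the exact bytes it recorded, and anyone checking the file later recomputes the tag and compares. One deliberate substitution should be flagged: this sketch uses HMAC-SHA256 from Python’s standard library, a shared-secret message authentication code, in place of a true public-key digital signature. In a real provenance system the device would hold a private signing key (for example an Ed25519 key) and verifiers would need only the matching public key; with HMAC, the verifier must hold the same secret. The names `sign_capture` and `verify_capture` and the per-device key are illustrative assumptions.

```python
import hashlib
import hmac
import secrets


def sign_capture(device_key: bytes, media: bytes) -> str:
    """Return a hex tag binding these exact media bytes to the device's secret key."""
    # Hash the media first, mirroring how signature schemes sign a fixed-size digest.
    digest = hashlib.sha256(media).digest()
    # HMAC stands in for a public-key signature in this sketch (see lead-in).
    return hmac.new(device_key, digest, hashlib.sha256).hexdigest()


def verify_capture(device_key: bytes, media: bytes, tag: str) -> bool:
    """True only if the media is byte-for-byte what the device tagged."""
    expected = sign_capture(device_key, media)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = secrets.token_bytes(32)          # hypothetical per-device secret
    photo = b"raw image bytes go here"     # stand-in for a real JPEG file
    tag = sign_capture(key, photo)
    print(verify_capture(key, photo, tag))            # True
    print(verify_capture(key, photo + b"\x00", tag))  # False: one extra byte
```

The point of the design is that verification says nothing about whether an image *looks* real; it only confirms that the bytes are unchanged since capture, so even a one-byte edit, or a convincing deepfake that was never tagged by a trusted device, fails the check.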

Still, Goodfellow thinks that ultimately there’s probably not going to be a foolproof technological solution to the deepfake problem. Instead, we will have to navigate within a new and unprecedented reality where what we see and what we hear can always potentially be an illusion.

The upshot is that the increasing availability of, and reliance on, artificial intelligence will come coupled with systemic security risk. This includes threats to critical infrastructure and systems as well as to the social order, our economy and our democratic institutions.

Security risks are, I would argue, the single most important near-term danger associated with the rise of artificial intelligence. It is critical that we form an effective coalition between government and the commercial sector to develop appropriate regulations and safeguards before critical vulnerabilities are introduced.

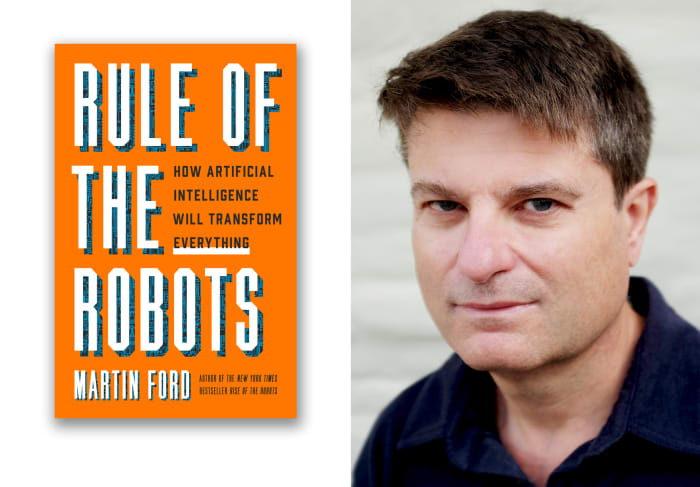

Martin Ford is the author of “Rule of the Robots: How Artificial Intelligence Will Transform Everything,” from which this is adapted.